The Problem

Never before in human history have we been able to ask a computer, in plain English, to do general-purpose things. Now, with AI (ChatGPT and other large language models, or LLMs), we can.

However, if you ask it to do something new, it often doesn’t follow the rules!

AI is good at transforming text from one form to another (fittingly, the T in GPT stands for Transformer).

But if you try to give it rules, it will fail to follow them reliably.

The dark ages

We tried numerous things to improve accuracy and reliability:

- Rewriting our instructions (prompt engineering) to get it to behave

- Saying it will die if it doesn’t follow key rules! (EmotionPrompt paper)

- Asking it to check its work and try again every time (self-verification papers)

- Creating a team of bots to work together (brief experiment with GPT Researcher)

- ...and more

Each brought incremental, diminishing gains, but accuracy was still not acceptable for our film-research purposes.

Transformers, robots in disguise

AI seems capable of logic, but I suspect it is really performing many transformations over its internal representations of concepts (encoded in the neural net’s weights) and over whatever it is given (the ‘prompt’).

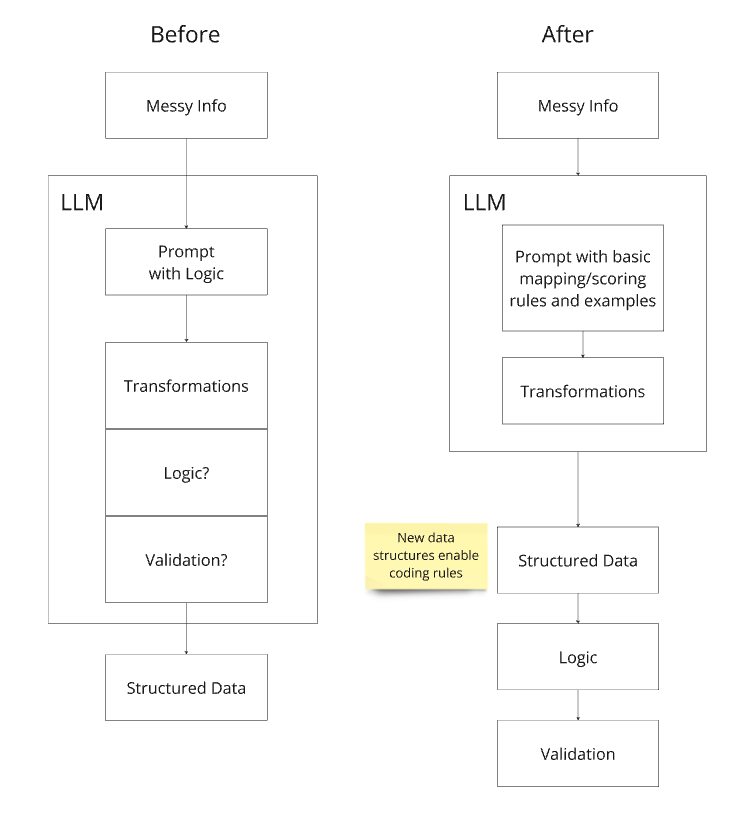

Taking business logic out of the prompts, limiting the prompts purely to transformations, and putting the logic in code has made a huge difference to our sanity, with improvements in:

- Accuracy

- Testability

- Prompt volatility (fewer butterfly effects, where a small prompt change causes large, unexpected failures)
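To illustrate the split, here is a minimal TypeScript sketch built on a hypothetical film-research task: the prompt only transforms an article into JSON claims, and code decides which claims count as recent. The prompt wording, the `Claim` shape, `parseClaims`, `recentClaims` and the 365-day threshold are all illustrative assumptions.

```typescript
// Illustrative sketch: the prompt only transforms text into JSON; the rules live in code.
// Prompt wording, field names and the 365-day threshold are hypothetical.

/** What we ask the LLM to do: a pure text-to-JSON transformation, no judgement. */
const EXTRACTION_PROMPT = `Extract every claim about the person in the article below.
Return only JSON: [{"claim": string, "date": "YYYY-MM-DD" | null}].
Do not judge or filter the claims.`;

interface Claim {
  claim: string;
  date: string | null; // ISO date as written in the source, or null if absent
}

/** Parse and validate raw LLM output; throws rather than passing bad data downstream. */
function parseClaims(raw: string): Claim[] {
  const data: unknown = JSON.parse(raw);
  if (!Array.isArray(data)) throw new Error("Expected a JSON array");
  return data.map((item, i) => {
    if (typeof item?.claim !== "string") throw new Error(`Item ${i}: missing claim`);
    if (item.date !== null && !/^\d{4}-\d{2}-\d{2}$/.test(item.date)) {
      throw new Error(`Item ${i}: bad date ${item.date}`);
    }
    return { claim: item.claim, date: item.date };
  });
}

/** Business rule, kept in code: a claim is "recent" if dated within maxAgeDays of today. */
function recentClaims(claims: Claim[], today: Date, maxAgeDays = 365): Claim[] {
  const msPerDay = 24 * 60 * 60 * 1000;
  return claims.filter((c) => {
    if (c.date === null) return false; // undated claims are excluded by this rule
    const ageDays = (today.getTime() - Date.parse(c.date)) / msPerDay;
    return ageDays >= 0 && ageDays <= maxAgeDays;
  });
}
```

Because the date arithmetic lives in `recentClaims`, it can be unit tested without calling a model at all, and changing the threshold never touches the prompt.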

Show, don’t tell

Like a toddler, AI loves to be shown examples (few-shot in-context learning).

So any time it misbehaves or does well, we take that data and turn it into examples to use next time.
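One way to turn reviewed outputs into examples, sketched in TypeScript under the assumption that each reviewed case is stored as an input/output pair: good answers are kept as-is, bad ones are stored with their correction, and the most recent few are placed before the new input. `ExampleBank`, `record` and `buildPrompt` are hypothetical names, and the `Input:`/`Output:` layout is just one common few-shot format.

```typescript
// Hypothetical sketch: every reviewed output (good or corrected) becomes a few-shot example.
interface FewShotExample {
  input: string;
  output: string; // the output we wanted: the model's good answer or our correction
}

class ExampleBank {
  private examples: FewShotExample[] = [];

  /** Record a case after review; `corrected`, if given, replaces a bad model output. */
  record(input: string, modelOutput: string, corrected?: string): void {
    this.examples.push({ input, output: corrected ?? modelOutput });
  }

  /** Build a prompt that shows the `k` most recent examples before the new input. */
  buildPrompt(instruction: string, input: string, k = 3): string {
    const shots = k > 0 ? this.examples.slice(-k) : [];
    const parts = [
      instruction,
      ...shots.map((ex) => `Input: ${ex.input}\nOutput: ${ex.output}`),
      `Input: ${input}\nOutput:`,
    ];
    return parts.join("\n\n");
  }
}
```

Picking examples by recency keeps the sketch short; many setups instead choose the stored examples most similar to the new input.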

Taming the complexity

In our setup, data flows in and out of both code and LLMs, so errors can compound and there’s plenty that can go wrong. This is why we weren’t impressed with the hyped team-of-bots (agentic) approaches.

By keeping code and prompts close together, we have built tight unit and integration tests for our prompts (using Jest) that run automatically across our pipeline (via GitHub Actions) if, and only if, the prompts or their related code change.

This means we can:

- Track whether overall accuracy increases or decreases

- Swap in new AI models and see their effect on performance

- Make code changes and do releases with confidence
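A rough TypeScript sketch of the kind of accuracy check such tests make possible. Everything here (`ModelFn`, `EvalCase`, `evaluate`, `checkRegression`, exact-match scoring after normalisation, the 0.02 tolerance) is an assumption for illustration; models are passed in as plain functions, so swapping one is a one-argument change.

```typescript
// Illustrative, model-agnostic accuracy check; all names and thresholds are hypothetical.
type ModelFn = (input: string) => Promise<string>;

interface EvalCase {
  input: string;
  expected: string;
}

interface EvalResult {
  accuracy: number; // fraction of cases whose output matched, 0..1
  failures: { input: string; expected: string; actual: string }[];
}

/** Normalise before comparing so whitespace/case noise is not counted as a failure. */
function normalise(s: string): string {
  return s.trim().toLowerCase().replace(/\s+/g, " ");
}

/** Score model outputs against expected answers, keeping the failures for inspection. */
function score(cases: EvalCase[], outputs: string[]): EvalResult {
  const failures = cases
    .map((c, i) => ({ input: c.input, expected: c.expected, actual: outputs[i] }))
    .filter((r) => normalise(r.actual) !== normalise(r.expected));
  const accuracy = cases.length === 0 ? 0 : (cases.length - failures.length) / cases.length;
  return { accuracy, failures };
}

/** Run every case through a model; comparing models means calling this with different ModelFns. */
async function evaluate(model: ModelFn, cases: EvalCase[]): Promise<EvalResult> {
  const outputs = await Promise.all(cases.map((c) => model(c.input)));
  return score(cases, outputs);
}

/** Throw if accuracy dropped more than `tolerance` below a recorded baseline. */
function checkRegression(result: EvalResult, baseline: number, tolerance = 0.02): void {
  if (result.accuracy < baseline - tolerance) {
    throw new Error(`Accuracy ${result.accuracy.toFixed(3)} fell below baseline ${baseline}`);
  }
}
```

Inside a Jest test this would typically be `const result = await evaluate(model, cases)` followed by `expect(result.accuracy).toBeGreaterThanOrEqual(baseline)`. Running only when related files change is normally handled outside the test code, for example with Jest's `--changedSince` option or a `paths` filter on the GitHub Actions workflow trigger; which fits best depends on the repository layout.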

Wrapping up

This diagram outlines what we were doing the painful way, and what our setup looks like now.

We have a large number of these running in a pipeline, alongside regular API calls and logic.
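The pipeline idea can be sketched in TypeScript as a list of typed steps (an LLM call, an API call or plain code), each with an optional validation so a bad output is caught at the step that produced it rather than compounding downstream. `PipelineStep`, `runPipeline`, `PipelineError` and the `StepKind` labels are hypothetical names for illustration.

```typescript
// Hypothetical sketch of a pipeline mixing LLM, API and plain-code steps.
type StepKind = "llm" | "api" | "code";

interface PipelineStep {
  name: string;
  kind: StepKind;
  run: (input: unknown) => Promise<unknown>;
  /** Optional check on this step's output, so bad data stops here instead of flowing on. */
  validate?: (output: unknown) => boolean;
}

/** Error that records which step failed. */
class PipelineError extends Error {
  readonly step: string;
  constructor(step: string, message: string) {
    super(`[${step}] ${message}`);
    this.name = "PipelineError";
    this.step = step;
  }
}

/** Run steps in order, feeding each output into the next step. */
async function runPipeline(steps: PipelineStep[], input: unknown): Promise<unknown> {
  let value = input;
  for (const step of steps) {
    try {
      value = await step.run(value);
    } catch (err) {
      throw new PipelineError(step.name, `${step.kind} step threw: ${String(err)}`);
    }
    if (step.validate && !step.validate(value)) {
      throw new PipelineError(step.name, `${step.kind} step produced invalid output`);
    }
  }
  return value;
}
```

Tagging each step with its kind also makes failures easier to triage: an error from an `llm` step points at a prompt, while one from a `code` step points at ordinary logic.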

What are we building?

We are building an AI Researcher to supercharge the development process:

- Identifying potential industry/subject rumours and risks for ideas

- Finding direct and representation contact details

- Developing the pitch and story opportunities